Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

GPU parallel computing for machine learning in Python: how to build a parallel computer | Takefuji, Yoshiyasu

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

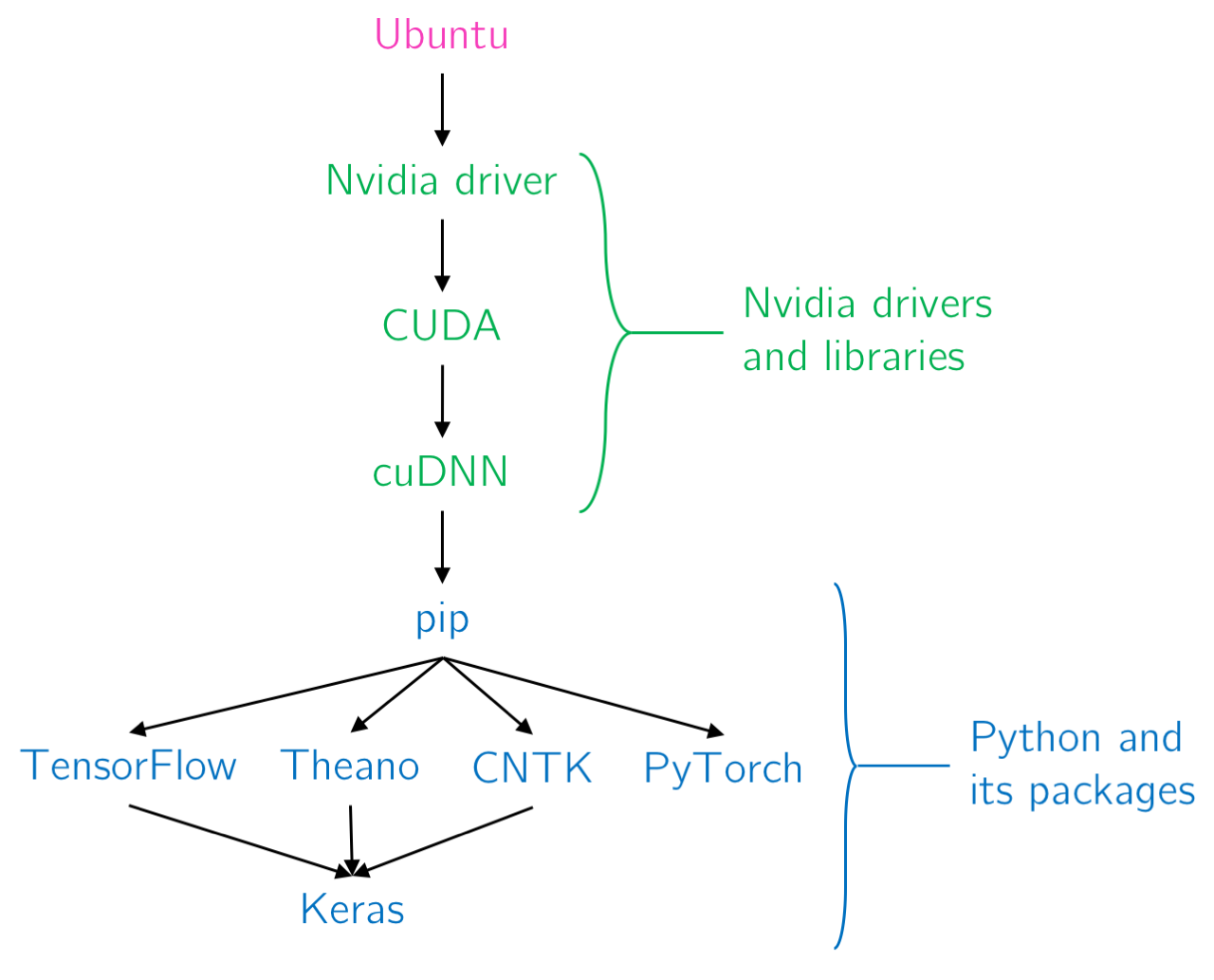

Build your own Robust Deep Learning Environment in Minutes | by Dipanjan (DJ) Sarkar | Towards Data Science

Hands-On GPU Computing with Python (Paperback)